Fearless Flappers

Here’s my spin on a modern rite of passage for beginners in machine learning: building a Flappy Bird clone.

Time-Warp:

What’s going on here?

A population of birds tries to navigate the course. The more successful birds are more likely to reproduce. Small mutations affect the behaviour of offspring.

First, I built the game environment, which consists of:

- The physics that act on the bird: gravity and the flap mechanic

- The obstacles and collision logic

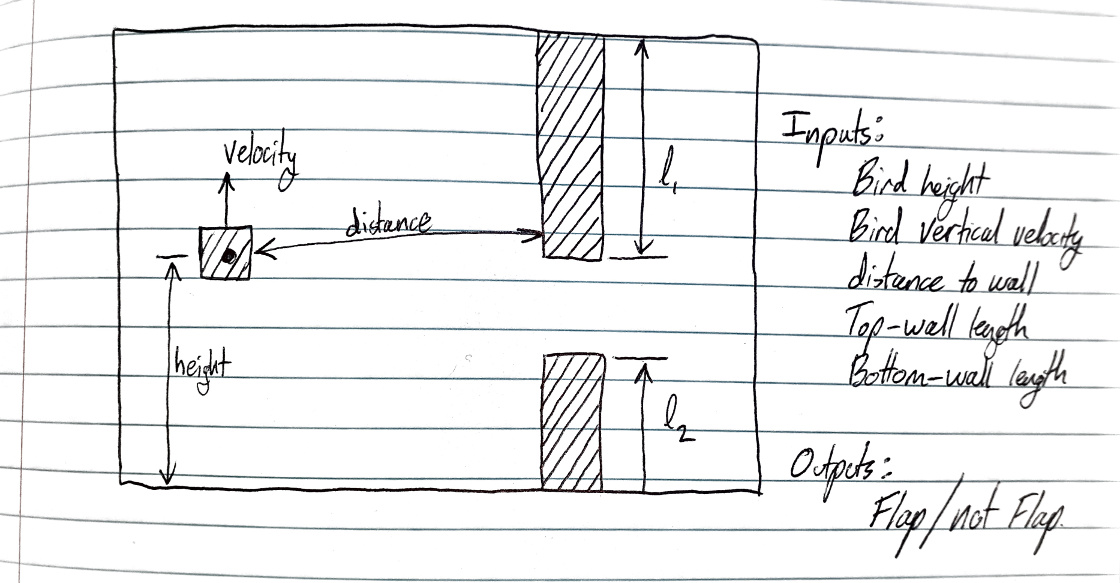
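The game code itself isn't shown here. The following is only a minimal Python sketch of the two pieces listed above. It assumes per-frame updates in screen coordinates (y grows downward) and a round bird approximated by a square bounding box. The constants `GRAVITY`, `FLAP_IMPULSE` and `MAX_FALL_SPEED`, and the `Bird` and `Pipe` classes, are hypothetical names and values, not taken from the project.

```python
from dataclasses import dataclass

# Hypothetical tuning constants (pixels, pixels per frame); not from the original game.
GRAVITY = 0.5          # downward acceleration added every frame
FLAP_IMPULSE = -8.0    # vertical speed set by a flap (negative = upward)
MAX_FALL_SPEED = 10.0  # terminal falling speed


@dataclass
class Bird:
    x: float
    y: float             # screen coordinates: y grows downward
    vy: float = 0.0
    radius: float = 12.0

    def flap(self) -> None:
        # A flap replaces the current vertical speed with a fixed upward kick.
        self.vy = FLAP_IMPULSE

    def update(self) -> None:
        # Gravity accelerates the bird downward, capped at a terminal speed.
        self.vy = min(self.vy + GRAVITY, MAX_FALL_SPEED)
        self.y += self.vy


@dataclass
class Pipe:
    x: float           # left edge of the pipe pair
    width: float
    gap_top: float     # bottom edge of the top pipe
    gap_bottom: float  # top edge of the bottom pipe

    def hits(self, bird: Bird) -> bool:
        # Axis-aligned box test: the bird is a square of side 2 * radius.
        overlaps_x = (bird.x + bird.radius > self.x
                      and bird.x - bird.radius < self.x + self.width)
        outside_gap = (bird.y - bird.radius < self.gap_top
                       or bird.y + bird.radius > self.gap_bottom)
        return overlaps_x and outside_gap
```

A collision only counts when the bird overlaps the pipe horizontally *and* pokes out of the gap vertically, so a bird flying cleanly through the gap survives.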

Next, control of each bird is handed over to a randomly initialised neural network, which is fed information from the game environment. The neural network is dense (fully connected) and has:

- 5 Inputs: the bird's height and vertical speed, the distance to the pipes, and the lengths of the top and bottom pipes.

- 1 hidden layer with 8 neurons.

- 1 Output: whether to flap.
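To make the network's shape concrete, here is a rough pure-Python sketch of a dense 5-8-1 network and its forward pass. The sigmoid activation, the uniform [-1, 1] weight initialisation, the 0.5 flap threshold and the input scaling inside the hypothetical `should_flap` helper are all assumptions, not details confirmed by the project.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def random_weight() -> float:
    # Assumed initialisation: uniform in [-1, 1].
    return random.uniform(-1.0, 1.0)


def dense(weights: Matrix, biases: List[float], inputs: List[float]) -> List[float]:
    # Fully connected layer: each neuron takes a weighted sum of every input, then sigmoid.
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


class Brain:
    """Dense network: 5 inputs, one hidden layer of 8 neurons, 1 output."""

    def __init__(self, n_in: int = 5, n_hidden: int = 8, n_out: int = 1) -> None:
        # Each row holds the incoming weights of one neuron.
        self.w_hidden: Matrix = [[random_weight() for _ in range(n_in)] for _ in range(n_hidden)]
        self.b_hidden = [random_weight() for _ in range(n_hidden)]
        self.w_out: Matrix = [[random_weight() for _ in range(n_hidden)] for _ in range(n_out)]
        self.b_out = [random_weight() for _ in range(n_out)]

    def predict(self, inputs: List[float]) -> List[float]:
        hidden = dense(self.w_hidden, self.b_hidden, inputs)
        return dense(self.w_out, self.b_out, hidden)


def should_flap(brain: Brain, bird_y: float, bird_vy: float, pipe_dist: float,
                top_len: float, bottom_len: float,
                screen_w: float, screen_h: float) -> bool:
    # Hypothetical scaling so every input lands roughly in [-1, 1].
    inputs = [
        bird_y / screen_h,
        bird_vy / 10.0,  # assumes vertical speeds of about +/-10 px per frame
        pipe_dist / screen_w,
        top_len / screen_h,
        bottom_len / screen_h,
    ]
    # Flap when the single output neuron exceeds 0.5 (an assumed threshold).
    return brain.predict(inputs)[0] > 0.5
```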

The whole population is trialled simultaneously, and each bird's performance is measured by how many pixels it travels to the right.
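A simultaneous trial can be sketched as one loop that steps every living bird each frame. This assumes the course scrolls a fixed number of pixels per frame, so distance travelled equals frames survived times that speed. The `BirdStats` record, `SCROLL_SPEED` and the `crashed` callback (a stand-in for the real physics and pipe checks) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List

SCROLL_SPEED = 3  # hypothetical pixels the course scrolls per frame


@dataclass
class BirdStats:
    alive: bool = True
    distance: int = 0  # fitness: pixels travelled to the right


def run_generation(birds: List[BirdStats],
                   crashed: Callable[[int, int], bool],
                   max_frames: int = 10_000) -> List[int]:
    """Step every bird in lockstep until all have crashed or the frame cap is hit.

    `crashed(index, frame)` is a placeholder for the game's collision checks.
    """
    for frame in range(max_frames):
        if not any(b.alive for b in birds):
            break  # the whole generation is out
        for i, b in enumerate(birds):
            if not b.alive:
                continue
            if crashed(i, frame):
                b.alive = False  # its distance is frozen as its fitness
            else:
                b.distance += SCROLL_SPEED
    return [b.distance for b in birds]
```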

If a bird hits a pipe, it is killed off and its performance is logged. Better-performing birds are more likely to pass their genetics (their neural network) on to the next generation, with small mutations.
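One common way to make better birds "more likely" to reproduce is fitness-proportional (roulette-wheel) selection. The sketch below assumes that scheme, treats each network's weights as a flat list of floats, and uses Gaussian mutation with no crossover. The project may use a different scheme. `Genome`, `mutate`, `next_generation` and the `rate`/`scale` defaults are illustrative assumptions.

```python
import random
from typing import List

Genome = List[float]  # a bird's network weights, flattened into one list


def mutate(genome: Genome, rate: float = 0.1, scale: float = 0.1) -> Genome:
    """Return a new list where each weight gets Gaussian noise with probability `rate`."""
    return [w + random.gauss(0.0, scale) if random.random() < rate else w
            for w in genome]


def next_generation(genomes: List[Genome], fitnesses: List[float],
                    rate: float = 0.1, scale: float = 0.1) -> List[Genome]:
    """Pick parents in proportion to fitness, then mutate a copy of each."""
    total = sum(fitnesses)
    # If every bird scored zero, fall back to picking parents uniformly.
    weights = fitnesses if total > 0 else None
    parents = random.choices(genomes, weights=weights, k=len(genomes))
    # mutate() always builds a new list, so children picked from the same parent stay independent.
    return [mutate(parent, rate, scale) for parent in parents]
```

Because selection is probabilistic, a mediocre bird can still occasionally reproduce, which helps keep some variety in the population.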

A diagram of inputs and outputs for the neural network

References

- Toy Neural Network - The Coding Train